Best Practices in building Nemo Land Kingdom

By

ChicMic Studios

December 1, 2022

In this article, we go over the best practices we followed while building Nemo Land Kingdom.

Nemo Land Kingdom is a virtual kingdom where users buy digital pieces of land in the HZM Metaverse (or HZM Digital Universe). It is a web3 application (DApp) built on the Ethereum blockchain, and it uses the ERC-721 standard for the land trading performed on the platform. It is similar to ‘The Sandbox’ metaverse, but instead of 96,000 lands it has more than 4 million, all displayed at lightning speed.

Securing Transactions with Signatures

When building a DApp, deciding what to keep on-chain and what to keep off-chain is often perplexing. In our case, we could not store the complete data for all 4 million+ lands on the blockchain. Our solution was to delegate authority to a separate account called “The Signer”. The Signer provides a signature, and the smart contract validates it before minting the land.
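The sign-then-verify flow can be sketched off-chain with Node's built-in crypto module. This is only an illustration: the message fields, the key handling and the function names are assumptions, and in a real contract the check would run in Solidity (commonly by recovering the signer address with ecrecover) rather than in JavaScript.

```javascript
const crypto = require("node:crypto");

// Hypothetical Signer key pair. In production the private key stays on the
// backend; the contract only knows the Signer's public identity.
const signer = crypto.generateKeyPairSync("ec", { namedCurve: "secp256k1" });

// Canonical message for one mint authorisation (field names are assumptions).
function mintMessage({ buyer, x, y, price }) {
  return Buffer.from(JSON.stringify({ buyer, x, y, price }));
}

// Off-chain: the Signer approves the land details.
function signMint(details) {
  return crypto.sign("sha256", mintMessage(details), signer.privateKey);
}

// Stand-in for the contract-side check: mint only if the signature matches.
function verifyMint(details, signature) {
  return crypto.verify("sha256", mintMessage(details), signer.publicKey, signature);
}

const details = { buyer: "0xBuyer", x: 12, y: 34, price: "1000" };
const signature = signMint(details);
console.log(verifyMint(details, signature)); // true
console.log(verifyMint({ ...details, price: "1" }, signature)); // false (tampered)
```

Because the land details are part of the signed message, the bulk of the land data can stay off-chain while the contract still rejects any mint request the Signer did not approve.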

Clustering in Node.js

By default, Node.js runs JavaScript code on a single thread and therefore uses only one CPU core. To use the full potential of the machine, we implemented a cluster architecture that utilises all the cores of the CPU.
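A minimal sketch of such a setup with Node's built-in cluster module is shown below. The port, the request handler and the restart-on-exit policy are assumptions for illustration, not details of the actual platform.

```javascript
const cluster = require("node:cluster");
const http = require("node:http");
const os = require("node:os");

// One worker per logical CPU core.
function workerCount() {
  return Math.max(1, os.cpus().length);
}

// Call startCluster() from the application's entry point.
// cluster.isPrimary requires Node.js 16 or later.
function startCluster(port = 3000) {
  if (cluster.isPrimary) {
    for (let i = 0; i < workerCount(); i++) cluster.fork();
    // Replace a worker that dies so capacity stays constant.
    cluster.on("exit", (worker) => {
      console.log(`worker ${worker.process.pid} exited, starting a new one`);
      cluster.fork();
    });
  } else {
    // Workers share the same listening port; incoming connections are
    // distributed among them.
    http
      .createServer((req, res) => res.end(`handled by ${process.pid}\n`))
      .listen(port);
  }
}
```

Each worker is a separate process with its own memory, so any state that must be shared between requests (such as cached data) has to live outside the workers.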

DB Profiling

The MongoDB profiler helps us monitor all the queries made to the database. We can analyse them and apply different techniques to improve the queries that run frequently but are not fast enough.

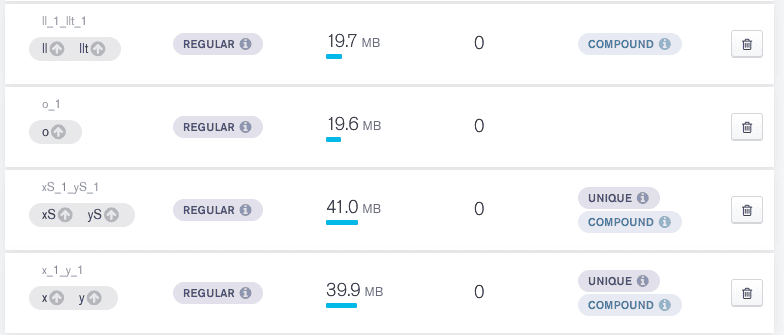
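As a rough sketch, profiling can be switched on and inspected from application code. The functions below assume `db` is a `Db` instance from the official MongoDB Node.js driver; the function names and the 100 ms threshold are illustrative choices.

```javascript
// `db` is assumed to be a Db instance from the MongoDB Node.js driver.
async function enableProfiling(db, slowMs = 100) {
  // Level 1 records only operations slower than `slowms` milliseconds.
  return db.command({ profile: 1, slowms: slowMs });
}

// Read back the slowest recorded operations from the profiler collection.
async function slowestQueries(db, slowMs = 100, limit = 10) {
  return db
    .collection("system.profile")
    .find({ millis: { $gt: slowMs } })
    .sort({ millis: -1 })
    .limit(limit)
    .toArray();
}
```

Profiling adds some overhead of its own, so a threshold-based level is usually a safer default than recording every operation.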

Indexing

After identifying the queries that needed optimisation, we analysed which keys would improve query execution time if indexed.

From the data provided by the profiler, we identified the APIs that required further optimisation. For instance, the land information API was called constantly, and it used x and y coordinates to fetch the data. After indexing these keys, there was a drastic improvement in response times.
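For a lookup by coordinates, one plausible choice is a compound index on both keys. The sketch below assumes `lands` is a `Collection` from the MongoDB Node.js driver and that documents store the coordinates in fields named `x` and `y`; the exact index used on the platform is not stated in this article.

```javascript
// `lands` is assumed to be a Collection from the MongoDB Node.js driver.
async function ensureCoordinateIndex(lands) {
  // Compound index so a query on { x, y } does not scan the whole collection.
  return lands.createIndex({ x: 1, y: 1 });
}

// The kind of query the index is meant to serve.
async function findLand(lands, x, y) {
  return lands.findOne({ x, y });
}
```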

Avoiding use of loops

Efficiency often takes a hit when loops are used carelessly, for example when a collection is scanned repeatedly or loops are nested. We minimised the use of loops by choosing suitable data structures, such as hash maps, to keep time complexity in check, and we applied the same thinking wherever another data structure was a better fit.
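As an illustration, the sketch below replaces repeated array scans with a single Map keyed by coordinates. The land records and the `owner` field are made up for the example.

```javascript
// Hypothetical land records; only the x/y coordinates come from the article.
const lands = [
  { x: 0, y: 0, owner: "alice" },
  { x: 0, y: 1, owner: null },
  { x: 1, y: 0, owner: "bob" },
];

const key = (x, y) => `${x}:${y}`;

// Build the index once: O(n).
const landsByCoord = new Map(lands.map((land) => [key(land.x, land.y), land]));

// Each lookup is now O(1) on average instead of an Array.find() scan per coordinate.
function ownersAt(coords) {
  return coords.map(([x, y]) => landsByCoord.get(key(x, y))?.owner ?? null);
}

console.log(ownersAt([[1, 0], [0, 0], [5, 5]])); // [ 'bob', 'alice', null ]
```

With m lookups over n lands, this turns O(n × m) work into O(n + m).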

Optimising DB Queries

Where and how we query the database has a significant effect on the speed of the query and of the API that depends on it. We added a caching layer where necessary, which saved unnecessary trips to the DB. We also kept the cache up to date by refreshing it whenever the related API was called, without letting the refresh slow down the response.
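One way to get this behaviour is to answer from the cache immediately and start the refresh without awaiting it. The sketch below uses an in-memory Map as a stand-in for the cache layer and a dummy loader in place of the real DB query; both, along with the function names, are assumptions.

```javascript
const cache = new Map(); // stand-in for the real cache layer

// Hypothetical data source standing in for a DB query.
async function loadLandFromDb(id) {
  return { id, loadedAt: Date.now() };
}

async function refresh(id) {
  const fresh = await loadLandFromDb(id);
  cache.set(id, fresh);
  return fresh;
}

async function getLand(id) {
  if (cache.has(id)) {
    // Respond from the cache right away; the refresh runs in the background
    // and is deliberately not awaited.
    refresh(id).catch((err) => console.error("cache refresh failed", err));
    return cache.get(id);
  }
  // Cache miss: only this request pays for the trip to the DB.
  return refresh(id);
}
```

The trade-off is that a caller may receive data that is one refresh behind, which is acceptable only when slightly stale reads are tolerable.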

Using $facet responsibly

$facet can reduce the response time in many cases, but not always. The common thinking is that one query is better than two, yet this did not hold for us: when we used $facet to merge a list query and a count query, the response time was 2 seconds, and when we split them into separate queries, it came down to 100 ms.
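The two shapes being compared look roughly like this. The sketch assumes `lands` is a `Collection` from the MongoDB Node.js driver; the function names, the pagination parameters and the choice to run the two separate queries concurrently are illustrative assumptions.

```javascript
// Variant A: one aggregation returns the page and the total together.
async function pageWithFacet(lands, filter, skip, limit) {
  const [result] = await lands
    .aggregate([
      { $match: filter },
      {
        $facet: {
          items: [{ $skip: skip }, { $limit: limit }],
          total: [{ $count: "count" }],
        },
      },
    ])
    .toArray();
  // $count emits no document when nothing matches, hence the fallback to 0.
  return { items: result.items, total: result.total[0]?.count ?? 0 };
}

// Variant B: two independent queries, issued concurrently.
async function pageWithTwoQueries(lands, filter, skip, limit) {
  const [items, total] = await Promise.all([
    lands.find(filter).skip(skip).limit(limit).toArray(),
    lands.countDocuments(filter),
  ]);
  return { items, total };
}
```

Which variant is faster depends on the data and the indexes available, so it is worth measuring both with the profiler rather than assuming.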

Using Asynchronous Operations Extensively

We avoided awaiting work that the response did not depend on, to take full advantage of the asynchronous nature of Node.js. For instance, we updated the cache asynchronously whenever an API call was made, which kept the API fast while making sure the cache did not go stale.

Our tile rendering engine also works asynchronously, giving us the best performance even under load.

Caching

We used a Redis cache layer to store information about lands, zones and other critical data. Redis gave us double-digit millisecond response times.

As the lands were queried constantly, caching the data was necessary to give users a great experience on the Nemo Land Kingdom platform.

Optimising API response

All the points discussed above helped us make the APIs extremely efficient, even under high load. Apart from caching and the other optimisations, we also made sure that the browser could handle the large amount of data being queried.

Reducing Size of JSON keys

To do this, we shortened the JSON keys, which made the response object smaller and therefore easier for the client to process. For instance, availableToSale became ats, reducing the size of the JSON response.
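A small mapping function is enough to illustrate the idea. Only the availableToSale to ats mapping comes from this article; the other keys and the sample record are hypothetical.

```javascript
// Long key -> short key. Only availableToSale -> ats is from the article;
// the remaining entries are made up for the example.
const SHORT_KEYS = { availableToSale: "ats", ownerAddress: "oa", zoneId: "z" };

// Rename known keys and leave everything else untouched.
function compact(land) {
  return Object.fromEntries(
    Object.entries(land).map(([k, v]) => [SHORT_KEYS[k] ?? k, v])
  );
}

const land = { x: 10, y: 20, availableToSale: true, ownerAddress: "0xabc", zoneId: 3 };
const full = JSON.stringify(land);
const small = JSON.stringify(compact(land));

console.log(small); // {"x":10,"y":20,"ats":true,"oa":"0xabc","z":3}
console.log(`${full.length} -> ${small.length} characters`);
```

The saving per record is small, but it is multiplied by every land returned in a response. The client needs the same key table to read the short names.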

Syncing DB with Blockchain Events

To reflect transactional changes on the front end in real time, we could not rely on a cron service to sync our database with the blockchain. For example, if a land was bought, we would have to wait for the cron service to verify that transaction and then call the rendering engine to update the tile.

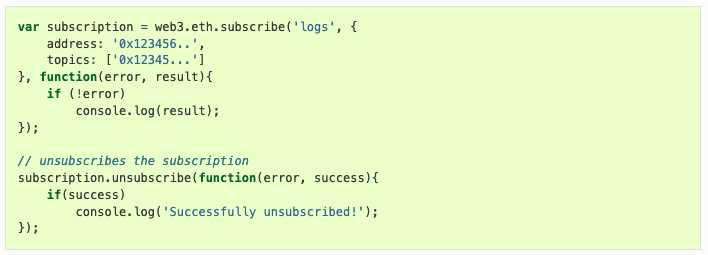

To improve this, we moved to blockchain sockets. We added listeners that constantly listen for transactions sent to our contract and keep our database in sync with the help of transaction logs.
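The listener pattern can be sketched with Node's EventEmitter standing in for a contract object connected over a WebSocket provider. Libraries such as ethers.js expose a similar `contract.on(eventName, listener)` interface. The Map used as a database and the handler name are assumptions; the event shape follows the ERC-721 `Transfer(from, to, tokenId)` event.

```javascript
const { EventEmitter } = require("node:events");

const owners = new Map(); // stand-in for the lands collection, keyed by tokenId

// Apply one ERC-721 Transfer(from, to, tokenId) log to local state.
function onTransfer(from, to, tokenId) {
  owners.set(String(tokenId), to);
  // A real handler would also ask the rendering engine to redraw the tile.
}

// Stand-in for a contract object subscribed to events over a socket.
const contract = new EventEmitter();
contract.on("Transfer", onTransfer);

// Simulate the node pushing a log for the purchase of token 42.
contract.emit("Transfer", "0xSeller", "0xBuyer", 42n);
console.log(owners.get("42")); // 0xBuyer
```

Because the handler runs as soon as the log arrives, the database no longer waits for the next scheduled sync to learn about a purchase.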

Managing Failures

While handling transactional data and showing it on the platform in real time, we had to make sure that all errors and failures were handled well, because missing even one transaction can be ruinous and can result in a user losing their land.

To handle this, we recorded all failed processes in the database and retried them using a cron service until every one of them was resolved.
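A simplified version of this record-and-retry loop is sketched below. The in-memory array stands in for the database collection of failed processes, and the job shape, function names and error message are assumptions.

```javascript
const failedJobs = []; // stand-in for a "failed processes" collection in the DB

// Run a job; on failure, record it so a scheduled task can retry it later.
function runOrRecord(job, handler) {
  try {
    handler(job);
    return true;
  } catch (err) {
    failedJobs.push({ job, attempts: 1, lastError: String(err.message) });
    return false;
  }
}

// Body of the scheduled (cron) task: retry everything that is still pending.
function retryFailed(handler) {
  for (let i = failedJobs.length - 1; i >= 0; i--) {
    const entry = failedJobs[i];
    try {
      handler(entry.job);
      failedJobs.splice(i, 1); // resolved, so remove it from the queue
    } catch (err) {
      entry.attempts += 1;
      entry.lastError = String(err.message);
    }
  }
  return failedJobs.length; // number of jobs still unresolved
}

// Example: a handler that fails while the blockchain node is unreachable.
let rpcUp = false;
const syncTx = (job) => {
  if (!rpcUp) throw new Error("node unreachable");
};

runOrRecord({ txHash: "0xabc" }, syncTx);
console.log(retryFailed(syncTx)); // 1 (still failing)
rpcUp = true;
console.log(retryFailed(syncTx)); // 0 (resolved)
```

Keeping the attempt count and last error with each entry makes it possible to alert on jobs that keep failing instead of retrying them silently forever.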